Overview

Abstract

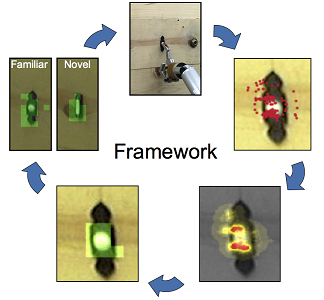

This project produced a large-scale experimental study in which a humanoid robot learned to press and detect doorbell buttons autonomously. The models for action selection and visual detection were grounded in the robot's sensorimotor experience and learned without human intervention. Experiments were performed with seven doorbell buttons, which provided auditory feedback when pressed. The robot learned to predict the locations of the functional components of each button accurately. The trained visual model was also able to detect the functional components of novel buttons.

Paper

Sukhoy, V. and Stoytchev, A., "Learning to Detect the Functional Components of Doorbell Buttons Using Active Exploration and Multimodal Correlation," In Proceedings of the 2010 IEEE International Conference on Humanoid Robots (Humanoids), Nashville, TN, pp. 572–579, December 6–8, 2010. PDF.


BibTeX Snippet

@InProceedings{sukhoy2010Humanoids,

author = {V. Sukhoy and A. Stoytchev},

title = {Learning to Detect the Functional Components of Doorbell Buttons Using Active Exploration and Multimodal Correlation},

booktitle = {Proceedings of the 2010 IEEE International Conference on Humanoid Robots (Humanoids)},

year = {2010},

pages = {572--579},

address = {Nashville, TN},

month = {December}

}

Earlier Papers

- Sukhoy, V., Sinapov, J., Wu, L., and Stoytchev, A., "Learning to Press Doorbell Buttons," In Proceedings of the 9th IEEE International Conference on Development and Learning (ICDL), Ann Arbor, MI, pp. 132–139, August 18–21, 2010. PDF.

- Wu, L., Sukhoy, V., and Stoytchev, A., "Toward Learning to Press Doorbell Buttons," In Proceedings of the 24th National Conference on Artificial Intelligence (AAAI), Atlanta, GA, pp. 1965–1966, July 11–15, 2010. PDF.